A CTO's view on a defensible moat in the age of generative AI

Deconstructing the generative AI landscape to build a defensible moat

There is a lot of talk about generative AI. You will see "sizzle" feature after "sizzle" feature as the world wakes up to the state of modern AI. The exploration of AI will manifest as a tsunami of experiments, some with long-term value, many without. This poses a difficult question for CTOs and executives: how do you make long-term investments and ensure that you are building a unique and defensible moat?

The Impact of Generative AI on TravelPerk

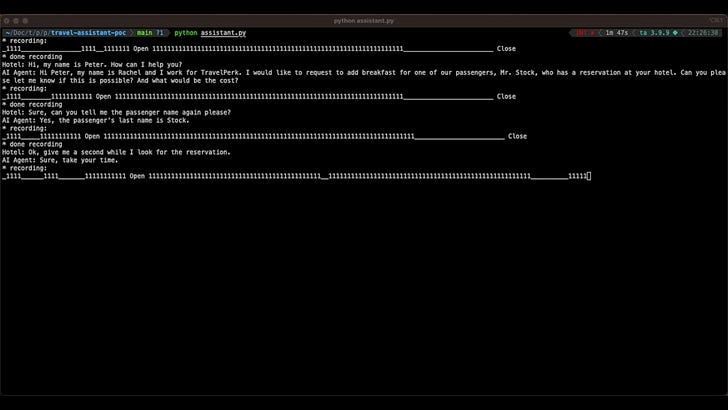

At TravelPerk, you will already feel the transformative impact of AI on our roadmap. From the semantic analysis of emails and "better than human" levels of triage and routing to ingesting complex invoices or helping our companies with their account setup, generative AI is flowing through our entire roadmap and creating beautifully magical touchpoints everywhere you look. We recently shared this implementation, and you can easily see that this is just the beginning.

The Power of Combining AI Services

The real power in this technology comes from seamlessly weaving multiple different models and services together, handing context between each of them as you use the AI best suited for whatever subtask you need.
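As a minimal sketch of that idea, the snippet below passes one shared context object through a chain of steps. Everything here is hypothetical: the step names (`classify_intent`, `route`, `draft_reply`), the queue names, and the keyword rule are stand-ins for real models and are not a description of TravelPerk's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Context:
    """Shared state handed from one step to the next."""
    text: str
    notes: dict[str, str] = field(default_factory=dict)


Step = Callable[[Context], Context]


def classify_intent(ctx: Context) -> Context:
    # Stand-in for a small classification model (assumption: keyword rule).
    ctx.notes["intent"] = "invoice" if "invoice" in ctx.text.lower() else "general"
    return ctx


def route(ctx: Context) -> Context:
    # Stand-in for a routing step that reads the previous step's output.
    queues = {"invoice": "finance", "general": "support"}
    ctx.notes["queue"] = queues[ctx.notes["intent"]]
    return ctx


def draft_reply(ctx: Context) -> Context:
    # Stand-in for a generative model that uses the accumulated context.
    ctx.notes["reply"] = f"Forwarded to the {ctx.notes['queue']} team."
    return ctx


def run_pipeline(text: str, steps: list[Step]) -> Context:
    """Run each step in order, handing the same context along the chain."""
    ctx = Context(text=text)
    for step in steps:
        ctx = step(ctx)
    return ctx


result = run_pipeline(
    "Please find the attached invoice for March.",
    [classify_intent, route, draft_reply],
)
print(result.notes["reply"])  # Forwarded to the finance team.
```

Because every step only sees and returns a `Context`, any single step can be swapped for a different model without touching the others.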

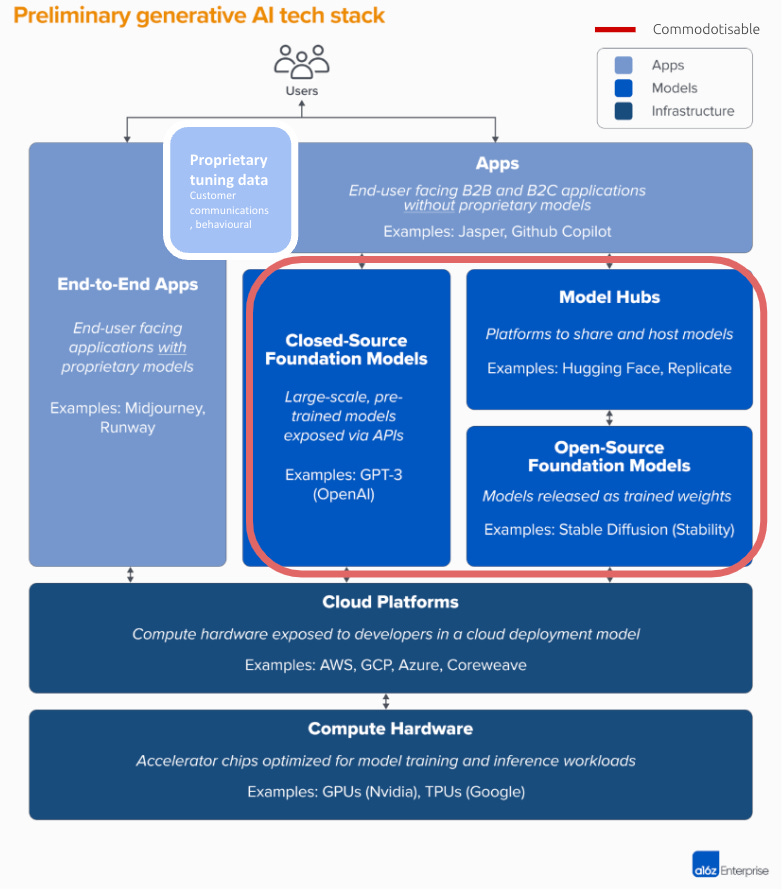

Where is the long-term moat behind all this, and how should you start to think about it? Our engineering leadership found this overview from a16z a helpful framing. I've made some modifications of my own to the diagram, demarcating the areas that are most likely to become rapidly commoditized and are currently undergoing the most unpredictable development and iteration.

We can break this diagram down into two broad buckets:

Apps:

Most of what we see on our LinkedIn feeds sits in that top layer, the "apps", where you are gluing services and doing some basic fine-tuning on other models.

The overview from a16z is a little high-level. We suspect the distinction between an 'end-to-end app' and an 'app' will become more blurred. Proprietary end-to-end models will likely be collections of hundreds of fine-tuned, optimized, and well-composed models (and indexes of large bodies of data), orchestrated to output results that in aggregate dramatically outperform the larger models.

Open / closed-source models:

The quality of models, both closed and open-source, is evolving at breakneck speed. Sizeable changes are taking place in weeks, not years.

New techniques like LoRA are making it easy to build and host models that excel at targeted tasks (and are starting to catch up to ChatGPT in terms of performance), with pretty tiny data sets and a laptop. This is going to lead to an explosion of niche models for very specific jobs.
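A rough back-of-the-envelope calculation helps show why this fits on a laptop. LoRA freezes a weight matrix of shape d × k and trains two low-rank factors of shapes d × r and r × k instead, so the trainable parameter count drops from d·k to r·(d + k). The dimensions below (4096 and rank 8) are illustrative assumptions, not figures from any particular model.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning the full d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update (d x r plus r x k)."""
    return r * (d + k)


# Illustrative sizes only (assumption): one square projection matrix, rank 8.
d = k = 4096
r = 8

full = full_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# full: 16,777,216  lora: 65,536  ratio: 0.39%
```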

Investing in data and positioning for a fast-moving external world

How do you ensure you come through this transition as an AI-native player with a moat, rather than simply building on shared foundations that will ultimately be available to everyone? From all this, we have started to draw a few early conclusions about the direction of this space:

The quality of models, both closed and open-source, is evolving at breakneck speed. In weeks, not years, sizable changes are taking place. Betting on a single provider is a mistake. When you add the risk of regulation and the unpredictable response from various governments, the issue compounds.

Data quality, not GPU resources, will be the most accessible moat for most organizations. The most successful organizations will build ML ops teams and pipelines that make it simple for anyone to train models that outperform major models in targeted domains and use cases.

Composability is a moat. This giant 'decoupling' will mean that end-to-end apps of the future compose hundreds of models, both in-house and externally hosted. We, for example, foresee fairly extensive investment in our PII architecture and privacy around this technology, which will mean we want self-hosted models that help us with PII scrubbing and data governance before we leverage externally hosted infrastructure. Being intentional about breaking that out, even at this stage, is critical.
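The "scrub before you leave the building" boundary can be sketched in a few lines. This is an assumption-heavy illustration: two simple regular expressions stand in for the self-hosted PII model, and `fake_external_model` is a hypothetical placeholder for whatever hosted provider sits on the other side. Real PII detection needs far more than pattern matching.

```python
import re
from typing import Callable

# Deliberately simple patterns (assumption): a real deployment would use a
# self-hosted model or a dedicated PII service instead of regexes.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def call_external(prompt: str, external_model: Callable[[str], str]) -> str:
    """Only scrubbed text ever crosses the boundary to the hosted model."""
    return external_model(scrub_pii(prompt))


# Hypothetical external model that records what it was sent.
seen: list[str] = []


def fake_external_model(prompt: str) -> str:
    seen.append(prompt)
    return "ok"


call_external(
    "Contact jane.doe@example.com or +34 600 123 456 about the booking.",
    fake_external_model,
)
print(seen[0])  # Contact [EMAIL] or [PHONE] about the booking.
```

Keeping the scrubbing step as its own function makes it possible to replace the regexes with a self-hosted model later without changing any caller.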

Practical Steps for Embracing AI in Your Architecture

Embrace the unbundling:

Bake abstraction in. We are starting to leverage LangChain and other abstraction layers that let the teams work above any single model.

Remain model-agnostic:

Operate under the assumption that within 24 months there will be 10 alternatives to OpenAI, and that you will be forced to choose models based on their geography and regulatory adherence. Try to remove dependencies where possible.

Focus on fewer, better data:

If you can get your telemetry right, you will start to see which steps in your chains are not performing as you would like. To tune proprietary models, large corpora of data will be less useful than small, highly curated data sets, which give you outsized returns.

Emphasize composability:

Just as writing a book involves many different steps and phases, you will want one model to help you with the outline, a different one to perform a deep analysis for historically accurate character profiling, another for creative flair, and so on.
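The practical steps above (an abstraction layer, model-agnostic code, per-step telemetry, and composition) can be combined in one small sketch. It does not use LangChain or any real provider SDK; `TextModel`, `StubModel`, `StepRecord`, and `run_chain` are hypothetical names introduced purely for illustration.

```python
import time
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """Anything with a name and a complete() method can join a chain."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubModel:
    # Stand-in for a self-hosted or externally hosted model (assumption).
    name: str
    prefix: str

    def complete(self, prompt: str) -> str:
        return f"{self.prefix}: {prompt}"


@dataclass
class StepRecord:
    """One telemetry row per chain step."""
    step: str
    model: str
    seconds: float
    ok: bool


def run_chain(
    prompt: str, steps: list[tuple[str, TextModel]]
) -> tuple[str, list[StepRecord]]:
    """Feed each step's output into the next and record per-step telemetry."""
    records: list[StepRecord] = []
    text = prompt
    for step_name, model in steps:
        start = time.perf_counter()
        try:
            text = model.complete(text)
            ok = True
        except Exception:
            ok = False
        elapsed = time.perf_counter() - start
        records.append(StepRecord(step_name, model.name, elapsed, ok))
        if not ok:
            break  # Stop the chain at the first failing step.
    return text, records


chain = [
    ("outline", StubModel(name="local-small", prefix="OUTLINE")),
    ("style", StubModel(name="hosted-large", prefix="STYLED")),
]
output, telemetry = run_chain("a story about a lighthouse", chain)
print(output)  # STYLED: OUTLINE: a story about a lighthouse
for record in telemetry:
    print(record.step, record.model, record.ok)
```

Swapping a provider then means changing one entry in `chain`, and the telemetry records show which step, on which model, is slow or failing.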

Conclusion

In the age of generative AI, building a defensible moat for your business is crucial. By focusing on the right areas of investment, remaining model-agnostic, and leveraging the power of composability, your company can stay ahead of the competition and thrive in this rapidly evolving landscape. By embracing these principles, you can ensure that your business remains at the forefront of AI innovation, creating unique and lasting value for your users.